Spring AI and Challenges with Function Calling

Spring AI boosts developer productivity by providing seamless integration with a variety of AI models. This post explores enriching a generic model with additional data coming from custom functions. This technique, known as function calling, lets you tap elegantly into a variety of external data sources. However, it comes with its own challenges and considerations.

Table of Contents

- What is Spring AI

- Function Calling with Spring AI

- The Good

- The Bad

- The Ugly

- Reducing Response Times

- Summary

What is Spring AI

Spring AI is, first and foremost, a productivity tool.

“The Spring AI project aims to streamline the development of applications that incorporate artificial intelligence functionality without unnecessary complexity.”

Source: Spring AI documentation

Disclaimer: This post is not an introduction to Spring AI. There are many other articles that do a great job in this regard. The Spring AI primer provides a great starting point for using the framework.

The complete source code of all examples mentioned in this post is available on GitHub.

The framework balances abstraction of key AI concepts with the ability to engage low-level APIs for more granular control. Its Java SDK makes it easy to work with various AI models, addressing key use cases like prompt templates and multimodality. Overall, the tool establishes a coherent structure for model requests and responses and supports Retrieval-Augmented Generation (RAG) through data retrieval, either via direct function calls or by accessing a vector database. Spring AI also comes with an ETL pipeline for additional processing of the retrieved information.

Retrieval-Augmented Generation (RAG) enriches AI model responses with information the model doesn’t have on its own. Pulling data from a database or an external API is a common practice that enhances model capabilities without the need for extensive retraining.

Let’s take a closer look at one of the retrieval mechanisms within the RAG paradigm supported by Spring AI – function calling.

Function Calling with Spring AI

Many AI models support a concept generally referred to as function calling. Consider the typical interaction with a model comprising the following parts:

- A prompt: A question or a challenge presented to the model.

- The model: A large language model capable of providing a human-like answer.

- An external data source: A service or a database that provides contextual data for what the user is asking.

When you ask a generic model a specific question, what are the odds of getting the right answer? You guessed it – they’re negligible. What can you do about it?

An intuitive solution uses several prompts. Your initial prompt would capture and classify the user intent. Based on what the user wants, your application would fetch specific data from a database or consult an external service. With the right information in hand, you would feed it into another prompt that generates the final answer. This kind of works, but juggling several prompts is cumbersome and error-prone.
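To make the multi-prompt flow concrete, here is a minimal Kotlin sketch. Everything in it is hypothetical and not part of Spring AI: `LanguageModel` stands in for any chat model client, `Intent` and `fetchContext` are illustrative names, and the fake model in `main` returns canned strings so that the example runs on its own.

```kotlin
// Hypothetical stand-in for a chat model client; not a Spring AI type.
fun interface LanguageModel {
    fun complete(prompt: String): String
}

// Illustrative intents the first prompt can classify a message into.
enum class Intent { WEATHER, UNKNOWN }

// Prompt 1: ask the model to classify what the user wants.
fun classify(model: LanguageModel, message: String): Intent {
    val label = model.complete("Classify the intent as WEATHER or UNKNOWN: $message")
    return Intent.values().firstOrNull { it.name == label.trim().uppercase() } ?: Intent.UNKNOWN
}

// Application code: fetch contextual data for the intent (hard-coded here).
fun fetchContext(intent: Intent): String = when (intent) {
    Intent.WEATHER -> "temperature=20.0 unit=CELSIUS"
    Intent.UNKNOWN -> ""
}

// Prompt 2: generate the final answer from the message plus the fetched data.
fun answer(model: LanguageModel, message: String): String {
    val intent = classify(model, message)
    val context = fetchContext(intent)
    return model.complete("Context: $context\nQuestion: $message")
}

fun main() {
    // Fake model with canned replies so the sketch runs without a real provider.
    val fakeModel = LanguageModel { prompt ->
        if (prompt.startsWith("Classify")) "WEATHER"
        else "Answer based on [${prompt.lines().first()}]"
    }
    println(answer(fakeModel, "What's the temperature in London?"))
}
```

Even in this toy form, the application owns the control flow: every new data source means another intent, another branch and another round trip to the model.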

A smarter approach is enhancing the model’s capability to adjust the prompt, which is exactly what several established providers have done:

You can describe functions and have the model intelligently choose to output a JSON object containing arguments to call the functions. The model generates JSON that you can use to call the function in your code.

(shortened) Source: OpenAI

The model alone won’t invoke the defined function, but a framework like Spring will ensure the function call is executed and the result is compiled into the model’s response. All of this happens behind the scenes.
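What happens behind the scenes can be pictured roughly as follows. This is a simplified sketch, not Spring AI's actual implementation: `ModelReply`, `ToolCall`, `Model` and `chat` are hypothetical names, and the arguments are passed as a plain map rather than JSON to keep the example free of dependencies.

```kotlin
// Hypothetical reply types: the model either answers or asks for a function call.
sealed interface ModelReply
data class FinalAnswer(val text: String) : ModelReply
data class ToolCall(val function: String, val arguments: Map<String, String>) : ModelReply

// Hypothetical model client. A real provider would receive the function
// descriptions and return the call arguments as JSON.
fun interface Model {
    fun reply(conversation: List<String>): ModelReply
}

// The framework's part: run the requested functions and feed the results back
// to the model until it produces a final answer.
fun chat(
    model: Model,
    functions: Map<String, (Map<String, String>) -> String>,
    userMessage: String,
): String {
    val conversation = mutableListOf("user: $userMessage")
    while (true) {
        when (val reply = model.reply(conversation)) {
            is FinalAnswer -> return reply.text
            is ToolCall -> {
                val function = functions[reply.function]
                    ?: error("Unknown function: ${reply.function}")
                // This invocation is synchronous: the loop waits for the result.
                conversation += "function ${reply.function}: ${function(reply.arguments)}"
            }
        }
    }
}

fun main() {
    // Fake model: requests the weather once, then answers using the result.
    val fakeModel = Model { conversation ->
        val result = conversation.lastOrNull { it.startsWith("function CurrentWeather: ") }
        if (result == null) ToolCall("CurrentWeather", mapOf("location" to "London"))
        else FinalAnswer("The current temperature in London is ${result.substringAfter(": ")}.")
    }
    // Canned function result standing in for a real weather lookup.
    val currentWeather: (Map<String, String>) -> String = { _ -> "20.0°C" }
    println(chat(fakeModel, mapOf("CurrentWeather" to currentWeather), "What's the temperature in London?"))
}
```

The detail worth noticing is that the function runs inside the request loop, so the model cannot produce its final answer until the function has returned.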

The Good

Spring AI offers a relatively straightforward approach to plugging your own function into the conversation between the user and the model. There are several approaches; here’s the one I found most useful.

Consider a simple question: What's the temperature in {your favourite location}? For the model to arrive at the right answer, it needs to consult an external weather service. Here’s one of many possible implementations of such a service:

interface WeatherService {
    fun forecast(request: WeatherRequest): WeatherResponse
}

data class WeatherRequest(val location: String)

sealed interface WeatherResponse {
    data class Success(
        val temp: Double,
        val unit: TempUnit
    ) : WeatherResponse

    data class Failure(val reason: String) : WeatherResponse
}

enum class TempUnit {
    CELSIUS,
    FAHRENHEIT
}

As you can imagine, an implementation of such a service would reach out to an external weather API to find out the current temperature at the requested location. Skipping this boring detail, let me move on to the next part, which is plugging the function call into the model.

First, you need a wrapper around your custom function:

import java.util.function.Function

class WeatherServiceFunction(
    private val weatherService: WeatherService,
) : Function<WeatherRequest, WeatherResponse> {

    override fun apply(request: WeatherRequest): WeatherResponse {
        return weatherService.forecast(request)
    }
}

Next, register the wrapper as a Spring bean:

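import org.springframework.ai.model.function.FunctionCallback
import org.springframework.ai.model.function.FunctionCallbackWrapper
import org.springframework.context.annotation.Bean

// Declared inside a @Configuration class
@Bean
fun currentWeatherFunction(service: WeatherService): FunctionCallback {
    return FunctionCallbackWrapper
        .builder(WeatherServiceFunction(service))
        .withName("CurrentWeather")
        .withDescription("Get current weather forecast")
        .build()
}

The description helps the model understand the purpose of the function, so that it gets called at the right time. The function name serves as a reference in your chat service: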

import org.slf4j.LoggerFactory
import org.springframework.ai.chat.prompt.Prompt
import org.springframework.ai.openai.OpenAiChatModel
import org.springframework.ai.openai.OpenAiChatOptions
import org.springframework.stereotype.Service

sealed interface ServiceResponse<out T> {
    data class Success<T>(val data: T) : ServiceResponse<T>
    data class Failure(val reason: String) : ServiceResponse<Nothing>
}

@Service
class ChatService(
    private val model: OpenAiChatModel
) {
    private val logger = LoggerFactory.getLogger(this::class.java)

    fun getResponse(message: String): ServiceResponse<String> {
        return try {
            val response = model.call(
                Prompt(
                    message,
                    // Refer to the registered function by its bean-defined name
                    OpenAiChatOptions.builder()
                        .withFunction("CurrentWeather")
                        .build()
                )
            )?.result?.output?.content ?: ""
            ServiceResponse.Success(response)
        } catch (e: Exception) {
            logger.error("Failed to get response", e)
            ServiceResponse.Failure("Sorry, I can't help you right now")
        }
    }
}

CurrentWeather identifies our custom function, which is now woven right into the prompt. Once model.call returns, it provides a meaningful response enriched with information from a weather forecast. Here’s an example response: The current temperature in London is 20.0°C.

With a little bit of plumbing we’re now able to add a custom function (or several) and the framework makes sure it gets called when it makes sense. This is very cool. However, not all is hunky-dory.

The Bad

What if your function takes time to complete? This is perfectly plausible, especially when it relies on an external weather forecast service.

Well, your options are somewhat limited since the framework only supports blocking calls through the Java Function interface:

@FunctionalInterface

public interface Function<T, R> {

R apply(T t);

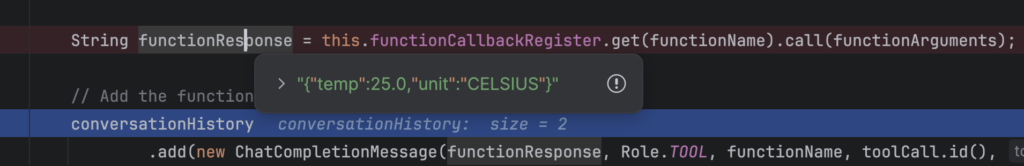

}Eventually, the registered function is invoked via the Spring’s Java SDK – in the respective model implementation (Open AI in my case). The response object is serialized as JSON and added to the final response:

OpenAiChatModel your custom function is expected to return data right of the bat.If your function takes ten seconds to complete, then the user has to wait at least this long to see the response.

You might wonder if streaming makes a difference:

model.stream(
    Prompt(
        message,
        OpenAiChatOptions.builder()
            .withFunction("CurrentWeather")
            .build()
    )
).mapNotNull<ServiceResponse<String>> {
    it?.result?.output?.content?.let { content ->
        ServiceResponse.Success(content)
    }
}.onErrorResume { e ->
    Flux.just(ServiceResponse.Failure("Sorry, I can't help you right now"))
}

It turns out that even with streaming, the model initially blocks waiting for all of the registered functions to complete. Again, the user won’t see any output until all functions yield their results, providing a complete set of contextual information to the model.

The Ugly

Spring AI is written in Java. This is not a problem until you switch to streaming model responses. Streaming is a technique where the model sends its response chunk by chunk instead of all at once, as a wall of text. This improves perceived responsiveness and makes the conversation flow look more natural.

Aesthetics aside, Spring AI implements streaming via WebFlux. Granted, it’s far less elegant than Kotlin coroutines. Of course, you can switch to coroutines thanks to Kotlin’s support for Reactor. However, you’ll have to deal with the intricacies of converting Flux to Flow and meticulously test that streaming continues to work.

Long story short, Spring AI’s Java SDK has quirks and limitations that require additional effort on your end if you want to make efficient use of Kotlin.

Reducing Response Times

Is there a solution that prevents timeouts due to blocking function calls? Well, ideally we don’t make (m)any external calls with unpredictable response times. Instead, we can read enrichment data from a database or a vector database, which is what my next blog post is about. Stay tuned 😉

When dealing with a function call, however, we can – if it makes sense – spin up several parallel requests, await the first available response, cancel all of the remaining requests and return the fastest response. This is a perfect task for Kotlin coroutines.

Our function calls are blocking in nature, and we cannot change that. However, internally we can leverage the concept of CompletableFuture and combine it with coroutines. Let’s expand on our example of a weather service.

First, let’s make our WeatherService call asynchronous:

interface WeatherService {
    suspend fun forecast(request: WeatherRequest): WeatherResponse
}

Next, in the forecast implementation we make two parallel requests to two separate weather services, return the faster response and discard the pending request:

import kotlinx.coroutines.*
import kotlinx.coroutines.selects.select

override suspend fun forecast(request: WeatherRequest): WeatherResponse =
    coroutineScope {
        // Start both requests in parallel
        val jobs = listOf(
            async { callSlowWeatherService(request) },
            async { callFastWeatherService(request) }
        )
        // Pick whichever request completes first, then cancel the other one
        val result = select {
            jobs.forEach { job -> job.onAwait { job } }
        }.also {
            coroutineContext.cancelChildren()
        }
        result.await()
    }

Do you want to know more about this pattern? This post explains it in depth.

Finally, let’s handle the async call within the functional wrapper:

import java.util.concurrent.CompletableFuture
import java.util.function.Function
import kotlinx.coroutines.*

class WeatherServiceFunction(
    private val weatherService: WeatherService,
) : Function<WeatherRequest, WeatherResponse> {

    // Scope shared by all invocations; the work runs on the IO dispatcher
    private val jobScope = CoroutineScope(SupervisorJob() + Dispatchers.IO)

    // This is a blocking call
    override fun apply(request: WeatherRequest): WeatherResponse {
        val future = CompletableFuture<WeatherResponse>()
        jobScope.launch {
            try {
                val response = weatherService.forecast(request)
                future.complete(response)
            } catch (e: Exception) {
                // Report the failure as a regular response instead of throwing
                future.complete(
                    WeatherResponse.Failure(e.message ?: "Unknown error")
                )
            }
        }
        // Block until the future is complete
        return future.get()
    }
}

Bear in mind, this is just one of several strategies we could try to reduce response times. The takeaway here is that we can internally leverage non-blocking operations using futures and coroutines.
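Another strategy that fits the same blocking contract is to cap how long the call is allowed to wait. The sketch below uses only the JDK's `CompletableFuture`. The names `callWithDeadline` and `slowLookup`, the fallback value and the durations are all hypothetical and chosen purely for illustration.

```kotlin
import java.util.concurrent.CompletableFuture
import java.util.concurrent.TimeUnit
import java.util.concurrent.TimeoutException

// Runs a blocking task on another thread and waits at most timeoutMillis for it.
// Returns the fallback if the deadline passes or the task fails.
fun <T> callWithDeadline(timeoutMillis: Long, fallback: T, task: () -> T): T {
    val future = CompletableFuture.supplyAsync { task() }
    return try {
        future.get(timeoutMillis, TimeUnit.MILLISECONDS)
    } catch (e: TimeoutException) {
        // Stop waiting. Note that cancel() does not interrupt the running task.
        future.cancel(true)
        fallback
    } catch (e: Exception) {
        fallback
    }
}

// Hypothetical slow lookup standing in for an external weather API.
fun slowLookup(delayMillis: Long): String {
    Thread.sleep(delayMillis)
    return "20.0°C"
}

fun main() {
    // Finishes well within the deadline, so the real value is returned.
    println(callWithDeadline(1_000, "unavailable") { slowLookup(50) })
    // Exceeds the deadline, so the fallback is returned instead.
    println(callWithDeadline(50, "unavailable") { slowLookup(1_000) })
}
```

In a wrapper like WeatherServiceFunction, the fallback could be a WeatherResponse.Failure, which lets the model tell the user that the forecast is unavailable instead of keeping them waiting.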

Summary

In summary, Spring AI champions developer productivity by seamlessly integrating with various AI models. Many common scenarios are supported out of the box. I find the SDK intuitive and well documented.

On the downside, there’s no concept of asynchronicity when using function calls with AI models. I can imagine the model providing an incomplete response, giving the user a chance to continue the conversation while waiting for data processing in the background. Chatbots maintain conversational context. It’s not too far-fetched to envision a bot dropping a message in the middle of a seemingly unrelated conversation, saying something along the lines of: “Apropos, here’s the information you asked me about two minutes ago”. I acknowledge this is probably much harder to get right in Spring or any other framework.

Please share your thoughts on the subject in the comment section below. Stay tuned for my next post where I’ll delve into enriching your AI model with data loaded from a vector database.

The complete source code of all examples mentioned in this post is available on GitHub.

Thanks for reading.